System Design Question 10 – Stock Trading Platform for 100M Concurrent Users

Real-Time Market Data, Order Matching, Scalability, Fault Tolerance, and Low Latency

Building a large-scale stock trading platform that supports real-time price updates, millions of concurrent trades, and strict regulatory/transactional requirements demands careful consideration of consistency, latency, fault tolerance, and data integrity.

A Note to My Readers

If you’ve been a regular reader of this blog, you might have noticed that I no longer explicitly include logging and observability components in every design. That’s not an oversight — it's intentional.

In past articles, I’ve covered these aspects thoroughly. Including them again in every post makes the diagrams cluttered and distracts from the domain-specific services that are at the heart of each system. So, to give more space and clarity to core components, I’ve left them out here. However, if you’re preparing for interviews, always remember to include logging, monitoring, and observability in your answers — they’re critical.

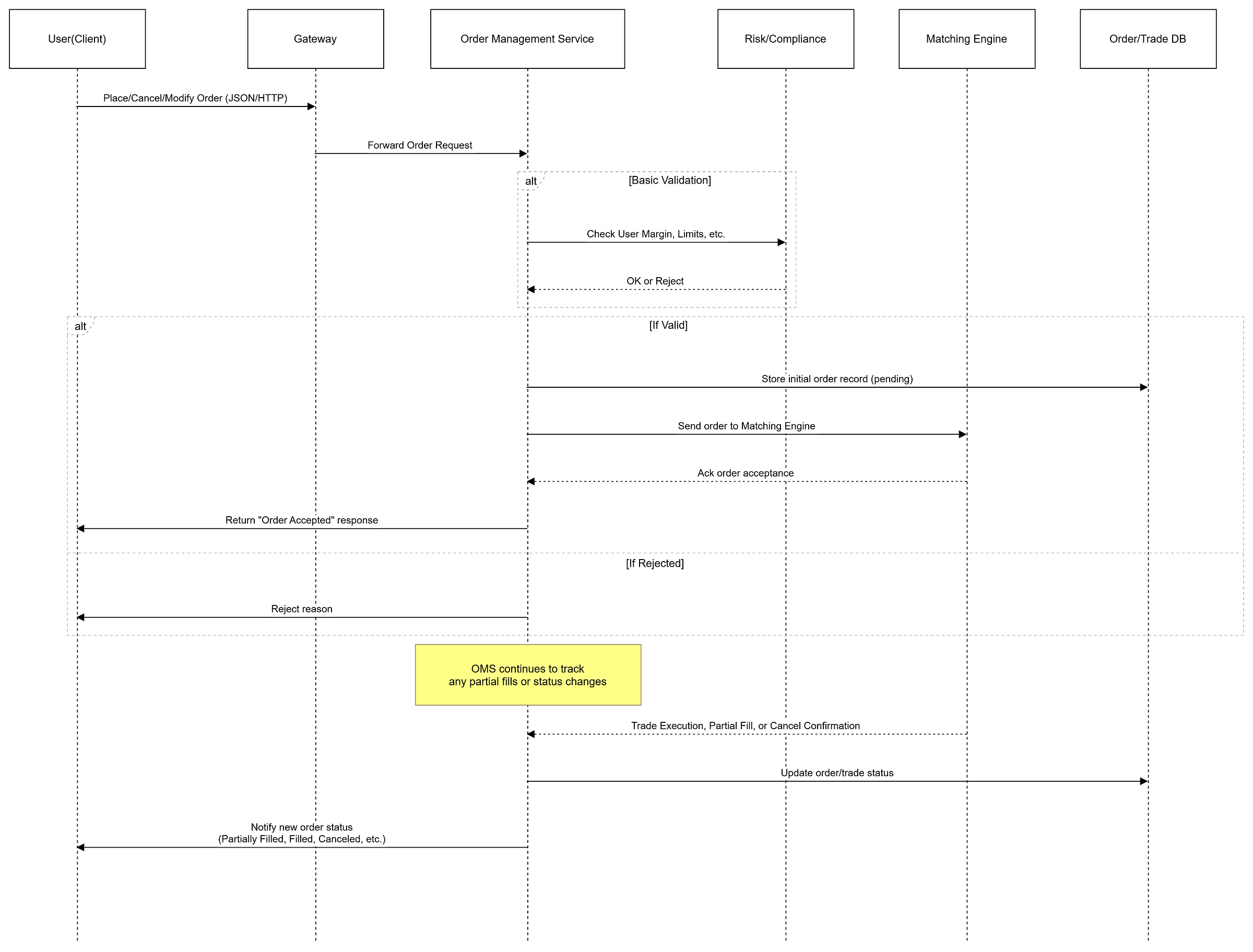

To enhance clarity, I’ve also started adding sequence diagrams and flow-based diagrams, something many of you asked for. They provide a better understanding of service interactions and request flows, especially in complex architectures.

A challenge I’ve been facing recently is Substack’s content length limit. Platforms like Gmail truncate emails beyond a certain size. If you see a cutoff in your inbox, I recommend reading the full post on the Substack app or website for the complete experience.

I’m also working on a parallel deep-dive research doc on similar systems, which is more detailed than what I can fit into a single blog post. It’s currently live on Google Docs. If you’d like access or want me to do a design breakdown based on that idea, just drop a message.

As you can probably tell, writing these articles takes a lot of time and effort — not just to write, but to deeply research and verify the content. That’s why you don’t see multiple posts every week, and I really appreciate your patience.

Lastly, for those who signed up for my free System Design Demystified sessions, I plan to host them next weekend for both IST and PST time zones. With over 320 people signed up, I’ll be splitting the sessions into 5-6 batches to keep them effective and interactive.

The following design questions were covered previously on this blog:

System Design of Airbnb Part1 - User, Listings, Search & Availability

System Design of Airbnb Part2 - Booking & Reservations, Payment, & Reviews & Ratings

System Design of Airbnb Part3 - Messaging, Notifications, Trust & Safety, and Customer Support

System Design of Airbnb User QnA post articles - Prevent Double Booking

System Design of Twitter - Most in depth article you could find


1. Requirement Gathering

1.1 Functional Requirements (FR)

User Onboarding & Authentication

New users register via a standard process (email, phone, or other KYC processes depending on regulatory requirements).

Secure login with MFA (Multi-Factor Authentication) recommended.

Account Management & Portfolio

Users can view account balances, positions (stocks owned, quantity, average cost), and transaction history.

Funding/withdrawal methods (bank transfers, credit cards, etc.).

Real-time updates to portfolio when trades settle.

Market Data / Ticker Feeds

Real-time or near real-time price updates for stocks (top of book data, last trade, best bid/ask, depth of market if needed).

Streaming updates to clients (web, mobile) with minimal latency.

Order Placement & Execution

Users place orders (market, limit, stop-loss, etc.).

Orders must match against the order book in real time if a matching price is found.

Partial fills, cancellations, modifications.

Real-time feedback to the user on order status (accepted, filled, partially filled, rejected).

Trade Settlement & Clearing

Once trades are executed, the platform updates user positions and cash balances.

Integrations with clearinghouses or internal settlement logic.

Risk Management / Compliance

Basic risk checks before accepting orders (e.g., margin checks, position limits).

Regulatory reporting: track user identity, generate audit logs, etc.

Notifications & Alerts

Push/email/SMS alerts for order fills, price movements, margin calls, etc.

Configurable user preferences.

Analytics & Reporting

End-of-day statements, transaction history, profit/loss calculations.

Basic charting or advanced analytics for user insights (candlestick charts, volume trends, etc.).

1.2 Non-Functional Requirements (NFR)

Scalability & Low Latency

Handle up to 100M concurrent users receiving market data streams.

Latency from order placement to execution acknowledgment should be sub-50 ms in an ideal scenario.

Real-time updates with millions of messages per second (market data + user trades).

High Availability & Fault Tolerance

The platform must remain operational even if a data center fails.

Redundant systems for critical components (order matching, data feeds, etc.).

Data Integrity & Consistency

Trades must be processed in the correct order; double-spending or overselling must be prevented.

Strong consistency for account balances and positions after trade execution.

Security & Regulatory Compliance

Secure user data, transactions, personal info (KYC).

Meet regulatory requirements (FINRA, SEC, MiFID II, etc.) for trade logs and data retention.

Audit trails for all transactions.

Observability

Logging, metrics, and real-time monitoring to detect performance bottlenecks.

Alerting on latencies, order mismatches, or data inconsistencies.

1.3 Out of Scope

Algorithmic Trading Strategies: Specific user algorithms or high-frequency trading logic are beyond this design’s scope (though we provide a stable API).

Advanced Risk Modeling: Comprehensive risk engine with real-time margin checks is partially covered, but deep quant models are out of scope.

Payment Gateways: We assume integration with third-party payment processors/banks, not building a gateway from scratch.

2. BOE Calculations / Capacity Estimations

Let’s assume:

User Base: 100M concurrent users.

Orders per User: On an active trading day, not everyone trades frequently, but let’s assume up to 5% place orders each minute during peak times.

That could be 5M users trading in a peak minute.

Peak Order Throughput:

If each of those 5M users places ~1 order per minute, we get ~5M orders/minute → ~83k orders/sec.

Realistically, surges can happen around market open/close or major news, so it could spike to 2–3× that. Let’s plan for ~200k orders/sec peak.

Market Data Feeds:

Market data can be extremely high volume (thousands of price updates per second per stock).

For tens of thousands of stocks, real-time messages might reach millions of updates/second.

We’ll rely on specialized market data feed handlers to distribute only relevant updates to each user.

Storage:

Each executed trade record is small (a few hundred bytes).

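If we sustained 200k trades/sec for the whole session, that’s 720M trades/hour. Over a 6.5-hour trading day, ~4.68B trades. This is a deliberate upper bound: the peak rate will not hold all day, and not every order results in a trade.

If each trade record is ~300 bytes, that’s ~1.4 TB/day just for raw trade execution data. Over 30 such days, that is ~42 TB of trade records alone (closer to ~30 TB across the ~21 trading days of a typical month), excluding logs, historical quotes, user data, etc.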

These estimates underscore the need for high-speed ingestion, robust real-time distribution, and large-scale storage.
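
The arithmetic above can be reproduced with a few lines of Python. This is only a sketch of the estimate: every input is one of the assumptions stated in this section, and the function and key names are made up for illustration.

```python
# Back-of-envelope capacity estimate. All inputs are this section's assumptions.
CONCURRENT_USERS = 100_000_000
ACTIVE_PERCENT_PER_MIN = 5        # 5% of users place an order in a peak minute
ORDERS_PER_ACTIVE_USER = 1        # ~1 order per active user per minute
PLANNED_PEAK_PER_SEC = 200_000    # planning figure after allowing for 2-3x surges
TRADING_HOURS = 6.5
BYTES_PER_TRADE = 300


def estimate():
    orders_per_min = CONCURRENT_USERS * ACTIVE_PERCENT_PER_MIN // 100 * ORDERS_PER_ACTIVE_USER
    baseline_orders_per_sec = orders_per_min / 60
    # Upper bound: pretend the planned peak rate holds for the whole session.
    trades_per_hour = PLANNED_PEAK_PER_SEC * 3600
    trades_per_day = trades_per_hour * TRADING_HOURS
    tb_per_day = trades_per_day * BYTES_PER_TRADE / 1e12
    return {
        "orders_per_min": orders_per_min,
        "baseline_orders_per_sec": baseline_orders_per_sec,
        "trades_per_hour": trades_per_hour,
        "trades_per_day": trades_per_day,
        "tb_per_day": tb_per_day,
        "tb_per_30_days": tb_per_day * 30,
    }


if __name__ == "__main__":
    for name, value in estimate().items():
        print(f"{name}: {value:,.2f}")
```

Changing a single input (for example the active percentage) shows quickly how sensitive the storage figure is to the peak-rate assumption.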

3. Approach (High-Level Design)

3.1 Architecture Overview

We can divide the system into multiple core services:

Gateway / API Layer

Handles REST/WebSocket connections for user actions (login, place order, get market data streams).

Authenticates and routes requests to internal services.

Market Data Service

Consumes live feeds from exchanges, normalizes data, and publishes real-time price/quote updates to interested users.

Possibly uses a pub/sub system (Kafka, NATS) for high-volume streaming.

Order Management Service (OMS)

Accepts and validates user orders (risk checks, position checks).

Sends valid orders to the Matching Engine or external exchange.

Tracks order lifecycle (open, partially filled, filled, canceled).

Matching Engine

Maintains an order book for each stock, matching buy and sell orders in real time.

Typically scaled via partitioning by symbol or group of symbols.

Updates the Market Data Service upon trades, so price feeds remain current.

Portfolio/Accounts Service

Maintains user balances, positions.

Updates after trades settle (trade confirmations from OMS).

Ensures real-time reflection of cash/stock holdings.

Clearing & Settlement Service

Manages post-trade settlement processes, either in-house or via external clearinghouses.

Finalizes trades on the applicable settlement cycle: T+1 for US equities (since May 2024), T+2 in many other markets, or as required by local regulations.

Risk & Compliance Service

Performs risk checks in real time or near real time (margin checks, short selling constraints).

Logs all user actions for audit; monitors suspicious trading patterns.

Notification Service

Sends trade confirmations, account alerts, or system messages to users.

Integrates with email, SMS, or push notifications.

Logging & Monitoring

Collects logs, metrics, and traces for troubleshooting and real-time alerts.

3.2 Data Consistency vs. Real-Time Requirements

Order Execution: Must have strong consistency. The order matching process cannot afford to be eventually consistent—race conditions would break trades.

Market Data: Can be near real-time or best-effort real-time. Slight delays of milliseconds are generally acceptable, but we aim for sub-100 ms from the exchange.

Portfolio Balances: After a trade is executed, we must reflect changes quickly. The write itself requires strong consistency, though slightly stale, eventually consistent reads from replicas may be acceptable for display purposes.

3.3 Security & Privacy Considerations

Encryption in Transit: All connections (user to gateway, service to service) secured via TLS.

Authentication/Authorization: Role-based checks (e.g., normal user vs. admin) and multi-factor authentication for user logins.

PII Compliance: Store user personal data in encrypted form, follow GDPR/CCPA compliance if global.

4. Databases & Rationale

4.1 Order and Trade Store

Primary Use Case: Persisting orders and executed trades for compliance, audit, and user queries.

Chosen Database: A highly available NewSQL solution such as CockroachDB (I am planning to write a new blog about this).

Why: Strong transactional guarantees needed. ACID properties help ensure no double entry or data loss for trades.

4.2 Market Data Store

Primary Use Case: High-throughput ingestion of real-time quotes, storing for short-term retrieval or analytics.

Chosen Database: Time-series DB (e.g., InfluxDB) or specialized solutions (kdb+ used in finance).

Why: Optimized for time-series data, quick writes, fast queries for historical charts or real-time aggregation.

4.3 User Accounts & Portfolio

Primary Use Case: Maintaining balances, positions, historical transactions.

Chosen Database: A robust relational DB or a strongly consistent NoSQL with transaction support.

Why: Critical financial data that requires consistency and transactions.

4.4 Caching Layer

Primary Use Case: Caching hot data (e.g., top of book quotes, user session info, partial order book).

Chosen Database: In-memory cache like Redis.

Why: Sub-millisecond lookups for real-time data, ephemeral store for quick retrieval.

5. APIs

5.1 Gateway / API Layer

POST /auth/login

Payload: credentials, MFA code (if applicable).

Returns: session token, user details.

POST /orders/place

Payload: { symbol, side, quantity, orderType, price (if limit), etc. }

Returns: orderId, status=“ACCEPTED” or error.

GET /market-data/subscribe?symbol=XXX (WebSocket or streaming API)

Opens a stream of real-time price data for symbol=XXX.

GET /portfolio

Returns: current positions, PnL, available cash.

5.2 Order Management Service

POST /oms/validateOrder

Payload: { userId, symbol, quantity, orderType, price }

Performs basic checks, returns either “VALID” or “REJECT.”

POST /oms/match

Payload: { orderId, symbol, side, quantity, price }

Forwards to Matching Engine or aggregator if multiple engines.

PATCH /oms/cancelOrder

Payload: { userId, orderId }

Attempts cancellation if order not fully filled.

5.3 Matching Engine

Typically, the Matching Engine doesn’t expose a classic HTTP API; instead it uses internal, high-throughput protocols or a direct message bus (e.g., FIX, gRPC, or custom TCP).

MatchRequest: { orderId, side, quantity, symbol, price }

TradeExecution: { tradeId, matchedQuantity, fillPrice, timestamp }

5.4 Portfolio/Accounts Service

POST /portfolio/update

Payload: { userId, symbol, changeInQuantity, newCashBalance }

Ensures ACID update to user’s account.

GET /portfolio/history?userId=xxx

Returns transaction/trade history for compliance and user display.

6. Deep Dive into Core Services

A. Gateway / API Layer

Overview and Purpose

Frontline for user interactions, both REST (HTTP) and streaming (WebSocket) for data.

Manages user sessions, rate limits, and authenticates requests.

Architecture and Data Flow

Connection Establishment:

Users connect via HTTPS or WebSockets. The Gateway validates tokens with the Auth Service.

Routing:

Order requests → OMS. Market data subscription → Market Data Service.

Session Tracking:

Might store ephemeral session data in Redis (sessionId → userId).

Corner Cases

Burst of logins at market open: Horizontal scaling behind a load balancer.

Expired tokens: If a token is invalid mid-session, forcibly close the connection or require re-auth.

Rate limiting: Prevent malicious or accidental spikes from a single user or IP.
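
For the rate-limiting corner case, one widely used technique is a token bucket per user or IP. The sketch below is an assumption about how the Gateway could do it, not a description of any specific product; the class name and parameters are hypothetical, and a real deployment would keep the bucket state in a shared store such as Redis rather than in process memory.

```python
import time


class TokenBucket:
    """Allow short bursts up to `capacity`, refilling at `rate_per_sec`."""

    def __init__(self, rate_per_sec, capacity, clock=time.monotonic):
        self.rate = rate_per_sec
        self.capacity = capacity
        self.tokens = float(capacity)   # start full so the first burst is allowed
        self.clock = clock              # injectable so the bucket is testable
        self.last = clock()

    def allow(self, cost=1.0):
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


if __name__ == "__main__":
    bucket = TokenBucket(rate_per_sec=5, capacity=10)
    accepted = sum(bucket.allow() for _ in range(25))
    print(f"accepted {accepted} of 25 back-to-back requests")
```

The Gateway would keep one bucket per key (user ID or IP) and reject or delay a request when `allow()` returns False.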

B. Market Data Service (MDS)

Purpose and Scope

A stock trading platform hinges on accurate, timely market data: the real-time feed of stock prices, quotes, volumes, order-book depth, and trade executions. The Market Data Service (MDS) ingests raw data from external exchanges or from an internal Matching Engine, normalizes that data, and then republishes it to internal systems and end-user clients. For a platform with 100 million concurrent users, the scale of data dissemination is massive, often reaching millions of data points per second.

Data Ingestion

Exchange Feeds

Large stock exchanges provide proprietary data channels. Some use standard protocols such as FIX (Financial Information eXchange) or FAST (FIX Adapted for STreaming), while others use ITCH or their own binary feeds.

The MDS will maintain persistent connections to multiple exchange servers to ensure redundancy. If one feed has latency or drops, failover is seamless.

Data typically includes top-of-book information (best bid and ask), last trade price, order book depth (levels 1-5 or more), and real-time ticker updates.

Internal Matching Engine Feeds

If the platform itself hosts a Matching Engine for certain symbols, then trades and updates generated internally must be fed back into MDS.

This loop ensures that the MDS has a unified, up-to-date view of both external and internal markets (for example, if the platform crosses trades internally or offers alternative trading system functionality).

Normalization & Processing

Symbol Mapping: Exchanges label instruments in different ways, and the MDS must map them to a canonical identifier.

Aggregated Order Book: In some designs, MDS might aggregate data from multiple venues so users can see consolidated quotes. The result is a consolidated best bid and offer (BBO) feed.

Ticker Consolidation: When multiple updates arrive in the same millisecond for a symbol, MDS might combine them into a single event to reduce downstream message load.
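
Ticker consolidation (often called conflation) can be sketched as "keep only the latest update per symbol within each millisecond". The field names `symbol`, `ts_ms`, and `price` below are assumptions for illustration; real feeds carry richer messages, and a production version would work on a stream rather than a list.

```python
def conflate(updates):
    """Collapse updates that share a (symbol, millisecond) bucket.

    The last update in each bucket wins. Buckets keep the order in which
    they were first seen, because dicts preserve insertion order.
    """
    latest = {}
    for update in updates:
        key = (update["symbol"], update["ts_ms"])
        latest[key] = update   # a later update overwrites the earlier one
    return list(latest.values())


if __name__ == "__main__":
    raw = [
        {"symbol": "AAPL", "ts_ms": 1, "price": 100.0},
        {"symbol": "AAPL", "ts_ms": 1, "price": 100.5},
        {"symbol": "MSFT", "ts_ms": 1, "price": 50.0},
        {"symbol": "AAPL", "ts_ms": 2, "price": 101.0},
    ]
    for event in conflate(raw):
        print(event)
```

The trade-off is that intermediate prices inside a bucket are dropped, which is usually fine for retail displays but not for consumers that need every tick.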

Distribution / Publishing

Pub/Sub Architecture: A common approach is to push updates into a high-throughput messaging system (e.g., Kafka, NATS, or RabbitMQ). Services (OMS, user-facing Gateways) subscribe to relevant topics.

Push to End Users: The MDS might push data to user devices via WebSockets or other streaming APIs. For 100M concurrent users, this demands a highly scalable distribution layer with advanced filtering or partial data push (only symbols a user follows).

Snapshot + Incremental Updates: Typically, a client first retrieves a snapshot of an order book or last trade data, then applies incremental updates (like a stream of deltas) to stay synchronized.
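
The snapshot-plus-deltas pattern can be sketched on the client side as follows. This is a simplified illustration under stated assumptions: prices are integer cents, each delta carries a `seq` number and replaces the quantity at one price level, and a quantity of 0 removes the level. The message shape and the class name `BookView` are hypothetical.

```python
class BookView:
    """Client-side order book kept in sync from a snapshot plus deltas."""

    def __init__(self, snapshot):
        self.seq = snapshot["seq"]
        self.bids = dict(snapshot["bids"])   # price (cents) -> quantity
        self.asks = dict(snapshot["asks"])

    def apply(self, delta):
        if delta["seq"] <= self.seq:
            return "stale"                   # already covered by what we hold
        if delta["seq"] != self.seq + 1:
            return "gap"                     # missed an update: caller should resync
        levels = self.bids if delta["side"] == "bid" else self.asks
        if delta["qty"] == 0:
            levels.pop(delta["price"], None)
        else:
            levels[delta["price"]] = delta["qty"]
        self.seq = delta["seq"]
        return "applied"

    def best_bid(self):
        return max(self.bids) if self.bids else None

    def best_ask(self):
        return min(self.asks) if self.asks else None


if __name__ == "__main__":
    view = BookView({"seq": 10, "bids": {10000: 50}, "asks": {10010: 40}})
    print(view.apply({"seq": 11, "side": "bid", "price": 10005, "qty": 20}))
    print(view.best_bid(), view.best_ask())
```

On a "gap" result the client would typically request a fresh snapshot instead of guessing at the missing update.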

Performance & Scaling

Throughput: Millions of messages per second is not uncommon during peak market volatility. Horizontal scaling with partitioned topics or multiple MDS instances is crucial.

Latency: Sub-100 millisecond end-to-end latency (from exchange feed to user) is often the target. Co-locating MDS with exchange data centers can reduce network hops.

Caching & Batching: Caching recent updates in memory allows fast retrieval by new subscribers, and batching messages can reduce network overhead when feeding extremely high-volume data.

Error Handling & Corner Cases

Feed Drop: If the exchange feed goes down, MDS must fail over to a secondary feed or degrade gracefully.

Data Integrity: Corrupted data or out-of-order updates must be checked with sequence numbers. The MDS discards or re-requests missing updates when needed.

Load Surges: Market open/close or major news can cause intense bursts. Auto-scaling or pre-provisioned capacity is mandatory.

C. Order Management Service (OMS)

Role and Responsibilities

The OMS is the orchestrator of the order lifecycle. It receives user orders, validates them against trading rules (e.g., margin, compliance), and forwards valid orders to the Matching Engine. It also processes acknowledgments, partial fills, cancellations, and rejections. In addition, the OMS often provides a single interface for all external exchange routes if the platform routes orders to multiple venues.

Order Lifecycle

Order Creation

A user sends a request to place an order (market, limit, stop, etc.) via the Gateway.

The OMS performs preliminary checks: user authentication, account status (frozen?), position limits, margin sufficiency.

If checks pass, the order is tagged with a unique ID, timestamp, and assigned to the correct Matching Engine or external route.

Validation & Risk Checks

More advanced checks might be done in near real-time: does the user have enough buying power? If short selling, is the user eligible?

Compliance checks might block certain trades under watchlists or restricted lists.

Routing & Acknowledgement

The OMS either places the order into an internal queue for the local Matching Engine or routes it to an external exchange.

Once the Matching Engine or exchange acknowledges the order, the OMS updates the status to “Accepted.” The user is informed.

Order Updates

Orders can be partially filled if the entire quantity cannot be matched at once. The OMS records partial executions and updates the quantity remaining.

If the user requests a cancellation or modification, the OMS sends that instruction to the Matching Engine or exchange. The final result (e.g., “Canceled,” “Rejected,” “Modified”) flows back to the user.

State Management

In-Memory vs. Persistent: For speed, the OMS might track active orders in memory (e.g., a distributed in-memory data grid). Completed orders are then persisted to a relational or NoSQL store for audit.

Event Sourcing: Some trading systems adopt event sourcing to log every state change in an append-only store. This ensures an immutable audit trail. Replays can reconstruct the entire sequence if needed.
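
To make the event-sourcing idea concrete, here is a minimal sketch that rebuilds order state by replaying an append-only list of events. The event types (`CREATED`, `ACCEPTED`, `FILL`, `CANCELED`) and field names are assumptions chosen for illustration, not a standard schema.

```python
from dataclasses import dataclass


@dataclass
class OrderState:
    order_id: str
    quantity: int
    filled: int = 0
    status: str = "NEW"


def apply_event(state, event):
    """Apply one state-changing event to an existing order."""
    kind = event["type"]
    if kind == "ACCEPTED":
        state.status = "OPEN"
    elif kind == "FILL":
        state.filled += event["qty"]
        state.status = "FILLED" if state.filled == state.quantity else "PARTIALLY_FILLED"
    elif kind == "CANCELED":
        state.status = "CANCELED"
    return state


def replay(events):
    """Rebuild every order's current state from the full event log."""
    orders = {}
    for event in events:
        if event["type"] == "CREATED":
            orders[event["order_id"]] = OrderState(event["order_id"], event["qty"])
        else:
            apply_event(orders[event["order_id"]], event)
    return orders


if __name__ == "__main__":
    log = [
        {"type": "CREATED", "order_id": "o1", "qty": 100},
        {"type": "ACCEPTED", "order_id": "o1"},
        {"type": "FILL", "order_id": "o1", "qty": 40},
        {"type": "FILL", "order_id": "o1", "qty": 60},
    ]
    print(replay(log)["o1"])
```

Because state is derived only from the log, a restarted OMS node can recover by replaying events, usually from a periodic checkpoint rather than from the very beginning.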

Scalability & Resilience

Load Balancing: Multiple OMS nodes run in parallel, each handling a subset of user sessions or symbols.

Shard by Symbol or User: Orders for symbols A–F might go to OMS instance #1, G–L to #2, etc. Alternatively, user-based partitioning can keep a user’s orders on the same node for simpler concurrency.

Failover: If an OMS node fails, an identical standby should take over seamlessly. The system must avoid losing any orders in-flight.
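
Routing by symbol or by user can be as simple as a stable hash of the key. Note that this sketch swaps the alphabetical ranges mentioned above for hash-based partitioning, which tends to spread load more evenly; the function name and shard count are hypothetical. It uses `hashlib` rather than Python's built-in `hash()`, because the built-in is randomized per process and would route the same key differently on different nodes.

```python
import hashlib


def shard_for(key, num_shards):
    """Map a symbol or user ID to a shard index that is stable across processes."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


if __name__ == "__main__":
    for symbol in ["AAPL", "MSFT", "GOOG", "TSLA"]:
        print(symbol, "-> OMS instance", shard_for(symbol, 4))
```

Plain modulo hashing reshuffles most keys when the shard count changes, so a system that resizes often would likely prefer consistent hashing or an explicit routing table.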

Corner Cases

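Double-Submitting Orders: If a user’s client retries a request, the OMS must recognize the retry and avoid creating a second order. Since the OMS assigns the order ID itself, this usually means the client sends its own unique key (a client order ID or idempotency key) with each request.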

Session Drops: The user might disconnect mid-order. The OMS still processes the order logically. The user can reconnect to see updated status.

Rapid Cancels & Modifications: High-frequency traders may modify or cancel orders frequently. The OMS should handle large volumes of such requests without lag.
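
A minimal sketch of duplicate detection for retried submissions, assuming the client attaches its own `client_order_id` to every request (an assumption for this example; the class and ID format are hypothetical). A real OMS would keep this mapping in a durable, shared store with an expiry instead of a local dict.

```python
import itertools


class OrderIntake:
    """Deduplicate retried order submissions by (user, client order ID)."""

    def __init__(self):
        self._by_client_key = {}
        self._ids = itertools.count(1)

    def submit(self, user_id, client_order_id, order):
        """Return (order_id, created). A retry returns the original ID."""
        key = (user_id, client_order_id)
        if key in self._by_client_key:
            return self._by_client_key[key], False
        order_id = f"ORD-{next(self._ids)}"
        self._by_client_key[key] = order_id
        # A real system would persist `order` and forward it for matching here.
        return order_id, True


if __name__ == "__main__":
    intake = OrderIntake()
    order = {"symbol": "AAPL", "side": "buy", "qty": 10}
    print(intake.submit("u1", "c-001", order))
    print(intake.submit("u1", "c-001", order))   # retry, no new order created
```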

D. Matching Engine

Core Function

A Matching Engine is the beating heart of any exchange-like system: it holds an order book for each security (symbol), matching buy and sell orders in real time based on price/time priority. The engine ensures fair matching: older or better-priced orders match first, trades occur at the best possible price, and the resulting trades are final.

Data Structures & Algorithms

Order Book

Typically maintained as two sorted lists (or priority queues): one for buy orders (descending by price) and one for sell orders (ascending by price).

For extremely high speeds, specialized data structures like skip lists, balanced trees, or splay trees might be used to achieve O(log n) insertion and retrieval. Some implementations prefer in-memory arrays (for known symbol sets) with direct indexing for microsecond-level performance.

Matching Logic

When a new buy order arrives, the Matching Engine checks the best sell orders to see if the buy price is >= sell price. If yes, a trade is executed.

Partial fills occur if the incoming order quantity is larger than the available quantity at the best price. This process repeats until either the order is fully filled or the best opposite side price no longer meets the incoming order’s constraints.

Trade Generation

Each successful match creates a trade record: {tradeId, symbol, price, quantity, timestamp, buyerId, sellerId}.

This trade record is published to the OMS, Market Data Service, and Portfolio/Accounts Service for updates.
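
The order book and matching logic described above can be sketched with two heaps. This is a teaching example under several assumptions: it handles limit orders only, trades execute at the resting order's price, cancellations are not modeled, and the class and field names are made up. A production engine would use far more specialized structures.

```python
import heapq
import itertools


class OrderBook:
    """Single-symbol limit order book with price/time priority."""

    def __init__(self):
        self._bids = []                  # max-heap via negated price
        self._asks = []                  # min-heap
        self._seq = itertools.count()    # arrival order breaks price ties

    def submit(self, order_id, side, price, qty):
        """Match an incoming limit order; return trades as
        (buy_id, sell_id, price, qty) and rest any remainder."""
        trades = []
        is_buy = side == "buy"
        own, opposite = (self._bids, self._asks) if is_buy else (self._asks, self._bids)
        while qty > 0 and opposite:
            key, _, resting = opposite[0]
            resting_price = key if is_buy else -key
            crosses = price >= resting_price if is_buy else price <= resting_price
            if not crosses:
                break
            fill = min(qty, resting["qty"])
            buy_id, sell_id = (order_id, resting["id"]) if is_buy else (resting["id"], order_id)
            trades.append((buy_id, sell_id, resting_price, fill))
            qty -= fill
            resting["qty"] -= fill
            if resting["qty"] == 0:
                heapq.heappop(opposite)  # resting order fully filled
        if qty > 0:
            key = -price if is_buy else price
            heapq.heappush(own, (key, next(self._seq), {"id": order_id, "qty": qty}))
        return trades


if __name__ == "__main__":
    book = OrderBook()
    book.submit("s1", "sell", 101, 50)
    book.submit("s2", "sell", 100, 30)
    book.submit("s3", "sell", 100, 30)
    print(book.submit("b1", "buy", 100, 40))
```

In the usage example the buy for 40 shares first takes all 30 from `s2` (same price as `s3` but earlier arrival), then 10 from `s3`, and never touches `s1` because its price is above the buyer's limit.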

High-Throughput Architecture

Partition by Symbol: Since each symbol’s order book is distinct, the Matching Engine can be scaled out by assigning sets of symbols to different engine instances. This avoids concurrency conflicts across symbols.

In-Memory Execution: Matching typically happens in memory for sub-millisecond speed, with results appended to a durable log for recovery.

Low-Latency Networking: Co-locating the engine in data centers near major exchanges, or using specialized protocols, can reduce round-trip times significantly.

Risk & Consistency

Atomic Transactions: The engine must guarantee that no two orders for the same symbol are matched simultaneously in conflicting ways. A single-threaded approach per symbol or a fine-grained locking scheme ensures correctness.

Failover: If a Matching Engine instance goes down, the platform might need to freeze trading in those symbols briefly while a backup instance loads the last known state. Some advanced designs replicate the in-memory book to a standby in near real-time.

Corner Cases

Crossing Orders: A buy and sell arrive at exactly the same time. Deterministic tie-breaking (based on sequence ID or timestamp) is crucial.

Market Orders: These skip the price check and fill at the current best available price. If insufficient liquidity is present, the order might fill partially.

Price Volatility: Rapid changes can cause orders to be filled at widely varying prices within milliseconds. The Matching Engine must handle surges without stalling.

E. Portfolio/Accounts Service

Purpose and Responsibilities

After trades are executed, user positions (stocks held) and cash balances must be updated to reflect the new reality. The Portfolio/Accounts Service keeps track of all user financial data, from real-time positions to realized/unrealized profit and loss.

Data Model

User Balance: The available cash (or margin) for each user.

Positions: For each user and symbol: quantity owned, average cost basis, realized PnL, etc.

Transaction Log: Record of all trades, deposits, withdrawals, dividends, corporate actions (splits, mergers).

Workflow with Executed Trades

Trade Notification

When the Matching Engine completes a trade, it forwards a trade event to the OMS, which in turn notifies Portfolio/Accounts. Alternatively, the Matching Engine can publish directly to the Portfolio Service’s queue.

Position Update

The service increments (for a buy) or decrements (for a sell) the user’s position.

If it’s a partial fill, only the filled quantity is considered.

The cost basis might change if it’s an additional buy or if the user sold some shares from a previous lot.

Cash Balance Adjustment

A buy reduces the user’s cash by the trade value plus any trading fees.

A sell increases the user’s cash by the proceeds minus fees/taxes if applicable.

If margin is involved, the service checks margin requirements and updates collateral or loan amounts.
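
The position and cash updates above can be sketched as a single function applied per fill. This example assumes the average-cost method (FIFO or LIFO lots would need per-lot tracking), does not model short selling or margin, and uses `Decimal` to avoid floating-point drift in money amounts. All names are hypothetical.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass
class Position:
    quantity: int = 0
    avg_cost: Decimal = Decimal("0")
    realized_pnl: Decimal = Decimal("0")


def apply_fill(position, cash, side, qty, price, fee=Decimal("0")):
    """Update the position in place for one fill and return the new cash balance."""
    notional = price * qty
    if side == "buy":
        total_cost = position.avg_cost * position.quantity + notional
        position.quantity += qty
        position.avg_cost = total_cost / position.quantity
        return cash - notional - fee
    if qty > position.quantity:
        raise ValueError("sell exceeds held quantity (short selling not modeled)")
    # Selling realizes PnL against the average cost; the average itself is unchanged.
    position.realized_pnl += (price - position.avg_cost) * qty
    position.quantity -= qty
    if position.quantity == 0:
        position.avg_cost = Decimal("0")
    return cash + notional - fee


if __name__ == "__main__":
    pos, cash = Position(), Decimal("10000")
    cash = apply_fill(pos, cash, "buy", 10, Decimal("100"), Decimal("1"))
    cash = apply_fill(pos, cash, "buy", 10, Decimal("110"), Decimal("1"))
    cash = apply_fill(pos, cash, "sell", 5, Decimal("120"), Decimal("1"))
    print(pos, cash)
```

In the real service this update and the matching transaction-log entry would be written inside one database transaction so that position and cash cannot diverge.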

Consistency & Transactions

ACID Compliance: Each update to user balances is typically done within a transactional boundary to avoid double spending or negative positions. A robust SQL or NewSQL database is common.

Locking / Concurrency: If a user tries to place multiple concurrent orders in different symbols, the system can handle those in parallel as long as the total available cash is not exceeded. Some designs keep a “hold” on the user’s funds once an order is placed to ensure covering the potential fill.
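
The "hold" idea can be illustrated with a small account object that separates total balance from held funds. Amounts are integer cents, and the class and method names are assumptions for this sketch. In production each method would run inside a database transaction or under a per-user lock, which is omitted here.

```python
class CashAccount:
    """Track cash with holds so concurrent orders cannot overspend."""

    def __init__(self, balance_cents):
        self.balance = balance_cents
        self.held = 0

    @property
    def available(self):
        return self.balance - self.held

    def place_hold(self, amount):
        """Reserve funds for a new order; refuse if not enough is available."""
        if amount > self.available:
            return False
        self.held += amount
        return True

    def settle(self, held_amount, actual_cost):
        """On a fill, release the hold and debit what the trade actually cost."""
        self.held -= held_amount
        self.balance -= actual_cost

    def release(self, amount):
        """On cancel or reject, give the held funds back."""
        self.held -= amount


if __name__ == "__main__":
    account = CashAccount(100_000)            # $1,000.00
    print(account.place_hold(60_000))         # first order reserves $600
    print(account.place_hold(60_000))         # second order is refused
    account.settle(60_000, 59_500)            # filled slightly below the limit price
    print(account.balance, account.available)
```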

Reporting & Analytics

Historical Statements: The Portfolio Service might store years of transaction history for compliance and user access.

Real-Time PnL: Advanced systems recalculate PnL in real time as market prices change. This can be done by subscribing to MDS for symbol quotes, then recalculating each user’s net position.

Tax Calculations: Some regions require real-time or end-of-year tax form generation, which often necessitates tracking cost basis with FIFO, LIFO, or average price rules.

Scaling & Performance

Read/Write Patterns: At peak times, a large volume of trade executions triggers near-simultaneous writes. The service must handle bursts of updates.

Caching Strategies: Frequently accessed data (e.g., the user’s current portfolio) can be cached in memory or a distributed cache for fast retrieval. Writes still go to the authoritative database to preserve integrity.

Sharding by User: Splitting the user data across multiple database shards ensures that any single node’s load remains manageable.

Corner Cases

Partial Fills & Cancelled Orders: Ensuring the final fill quantity is reflected accurately, especially if multiple partial fills come in quick succession.

Corporate Actions: Stock splits or spin-offs can drastically change user holdings. The service must handle these recalculations systematically.

Margin Calls: If the user’s margin ratio falls below a threshold due to adverse price movements, the system may automatically liquidate positions or freeze new orders until the user funds the account.
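
A margin-call check can be sketched as a ratio of account equity to the market value of positions. The 25% maintenance threshold below is only an illustrative assumption (actual requirements depend on the broker and jurisdiction), long positions only are modeled, and the function names are hypothetical.

```python
from decimal import Decimal


def margin_ratio(cash, positions, prices):
    """Equity divided by market value; cash is negative when money is borrowed."""
    market_value = sum(Decimal(qty) * prices[symbol] for symbol, qty in positions.items())
    if market_value == 0:
        return None                       # no positions, so no margin ratio
    equity = market_value + cash
    return equity / market_value


def needs_margin_call(cash, positions, prices, maintenance=Decimal("0.25")):
    ratio = margin_ratio(cash, positions, prices)
    return ratio is not None and ratio < maintenance


if __name__ == "__main__":
    positions = {"ACME": 100}
    loan = Decimal("-3000")               # borrowed $3,000 to buy the shares
    print(needs_margin_call(loan, positions, {"ACME": Decimal("50")}))
    print(needs_margin_call(loan, positions, {"ACME": Decimal("38")}))
```

At $50 per share the equity is $2,000 on $5,000 of stock (40%), so no call; at $38 the equity falls to $800 on $3,800 (about 21%), which is below the assumed threshold.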

7. Addressing Non-Functional Requirements (NFRs)

A. Scalability & High Availability

Partitioning

Matching Engines partitioned by symbol range or market sector.

Shard databases for user portfolios if needed.

Load Balancing

Gateway behind global load balancers.

Matching Engines behind an internal routing layer that knows which engine handles which symbols.

Geo-Redundancy

Multiple data centers across regions.

Active-active or active-passive setups to handle failovers.

B. Performance & Low Latency

In-Memory Caches

Redis for quick session lookups, order book snapshots.

Low-Latency Networking

Possibly co-locate servers in financial data centers (e.g., near NYSE, NASDAQ, etc.).

High-Performance Messaging

Use specialized protocols (FIX, binary formats) for order routing and trade confirmations.

C. Security & Regulatory Compliance

Encryption

TLS for all client connections.

Audit Logs

Store all order requests, modifications, trades in tamper-evident logs.

KYC & AML

Integrate user identity checks, suspicious activity detection.

Data Retention

Keep historical trades for the legally required period (5–7 years or more, depending on jurisdiction).
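
One common way to make an audit log tamper-evident is to chain entries by hash, so that changing any past record invalidates everything after it. The sketch below is an assumption about how that could look, not a compliance-grade implementation; the record fields and function names are hypothetical, and a real system would also anchor the latest hash somewhere the writer cannot modify.

```python
import hashlib
import json

GENESIS = "0" * 64


def _entry_hash(prev_hash, record):
    payload = json.dumps(record, sort_keys=True)   # stable serialization
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_entry(log, record):
    """Append a record whose hash covers the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"record": record, "prev_hash": prev_hash,
                "hash": _entry_hash(prev_hash, record)})


def verify(log):
    """Recompute the chain; any edited or reordered entry breaks it."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _entry_hash(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True


if __name__ == "__main__":
    audit = []
    append_entry(audit, {"event": "ORDER_PLACED", "order_id": "o1", "qty": 10})
    append_entry(audit, {"event": "ORDER_FILLED", "order_id": "o1", "qty": 10})
    print(verify(audit))
    audit[0]["record"]["qty"] = 999     # simulate tampering
    print(verify(audit))
```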

D. Reliability & Fault Tolerance

Redundant Matching Engines

If one instance fails, a secondary can take over (hot standby or partitioned load).

Database Replication

Synchronous replication for critical data.

Disaster Recovery

Offsite backups, cross-region replication.

E. Observability & Monitoring

Centralized Logging

Real-time logs for user requests, order states, system metrics.

Alerts & Dashboards

Monitor latencies, queue depths, error rates, feed delays.

Tracing

Distributed tracing for debugging slow order placements.

8. Bringing It All Together

By splitting the system into specialized microservices—Gateway, Market Data, OMS, Matching Engine, Portfolio/Accounts, Risk/Compliance, Notifications, and Clearing—we can achieve:

Massive Scale: Handle 100M concurrent connections, with capacity for hundreds of thousands of orders/second.

Real-Time Updates: Push near-instant price changes to all interested users via streaming protocols.

Strong Consistency & Reliability: Order matching and portfolio updates happen atomically, preventing double-spend or inconsistent trades.

High Availability: Multi-region deployments, partitioned matching engines, and redundant data stores ensure continuous operation.

Regulatory Compliance: Detailed audit logs, KYC checks, and data retention policies integrated from the start.

Performance: Use of in-memory data, efficient messaging protocols, and co-location with exchange data centers keeps latencies low.

This architecture follows patterns common in real-world trading platforms, from discount brokers to high-frequency trading firms. It aims for robust performance and stringent data integrity, with the flexibility to integrate additional features (e.g., options trading, margin loans, advanced analytics) as the platform evolves.