System Design Question 9 - WhatsApp-like Messaging Service

Real-Time Chat, Group Messaging, Presence, Scalability, Durability, and Low Latency

Building a real-time messaging platform that supports one-to-one and group chats, message delivery/read receipts, and high availability requires thoughtful consideration of data consistency, message fan-out, and real-time push mechanisms. Below is a holistic design approach using the same format as requested.




1. Requirement Gathering

1.1 Functional Requirements (FR)

User Registration & Authentication

Basic user onboarding with phone-based verification or some credential system.

Maintain an address book or friend list (often contacts are auto-detected via phone number, but we’ll keep it generic).

One-to-One Messaging

Real-time sending and receiving of text (and possibly media).

Show “online/offline/last seen” statuses if desired.

Mark message states: Sent, Delivered, Read.

Group Chats

Create groups with multiple participants.

Admin roles: add/remove participants.

Message fan-out to all group members.

Delivery and read receipt tracking per user in the group (sometimes simplified to “2 ticks if everyone read it,” but the system can track individually).

Message History & Durability

Store messages on the server for a certain duration (or indefinitely).

Ensure messages can be re-downloaded if a user reinstalls or logs in on a new device.

Message Ordering

Typically, messages need a consistent ordering in the conversation.

Handle concurrency if both participants are online simultaneously.

User Presence & Status

Show “online” or “typing…” status (optional but common in messaging apps).

Search & Archiving

Let users search past messages or view archived chats.

Media Attachments (Optional)

Send images, audio, video. (Often requires a separate media store.)

1.2 Non-Functional Requirements (NFR)

Scalability & Low Latency:

Handle millions of concurrent connections. Average end-to-end latency should be under 200 ms for a real-time experience.

Reliability & Durability:

Messages must not be lost. Even if the user’s device is offline, the system holds them for later delivery.

Consistency:

At-least-once or exactly-once delivery semantics. The system must either avoid duplicates or have deduplication in place.

Global Availability:

Data center presence in multiple regions, ensuring low-latency connections for local users.

Fault Tolerance:

If one region is down, traffic fails over to another with minimal disruption.

Security:

Typically end-to-end encryption. For system design at scale, focus on securing channels, encrypting stored data (at least server side), and protecting user data privacy.

Observability:

Logging, metrics, and tracing for real-time detection of latencies or dropped messages.

1.3 Out of Scope

Detailed Payment or Monetization (ads, etc.).

Sophisticated spam/fraud detection beyond basic blocking/reporting.

Advanced analytics or big data machine learning recommendations.

2. BOE Calculations / Capacity Estimations

Let’s assume:

User Base: 100 million daily active users (DAU).

Average Sent Messages/User: ~50 messages per day (some might send more, others less).

Daily Message Volume: 100M × 50 = 5 billion messages/day.

Peak Throughput: Usually 10–20% of daily traffic in peak hour. So 500 million – 1 billion messages in an hour → ~140k – ~280k messages/sec at absolute peak.

Storage:

Each message might be ~200 bytes (text only).

Daily Volume: 5B × 200 bytes = 1 TB/day (just raw text – media attachments excluded).

Over a month, that adds up to ~30 TB of raw message data, and the total keeps growing if messages are stored indefinitely.

Connections:

If many users are concurrently online, we might see 10–15 million active connections at peak.

These numbers inform us that we need high throughput message ingestion, low-latency fan-out for groups, and robust storage for billions of messages monthly.
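The arithmetic above can be reproduced in a few lines. This is only a sketch of the estimate using the assumptions already stated (100M DAU, 50 messages per user, 200 bytes per message, 10–20% of daily traffic in the peak hour); the variable names are illustrative.

```python
# Back-of-envelope capacity estimate using the assumptions stated in this section.
DAU = 100_000_000
MSGS_PER_USER_PER_DAY = 50
BYTES_PER_MESSAGE = 200
PEAK_HOUR_SHARE = (0.10, 0.20)  # share of daily traffic that lands in the peak hour

daily_messages = DAU * MSGS_PER_USER_PER_DAY
average_rate = daily_messages / 86_400  # messages/sec averaged over the day
peak_rates = tuple(daily_messages * share / 3_600 for share in PEAK_HOUR_SHARE)
daily_storage_tb = daily_messages * BYTES_PER_MESSAGE / 1e12
monthly_storage_tb = daily_storage_tb * 30

print(f"daily messages:  {daily_messages:,}")
print(f"average rate:    {average_rate:,.0f} msg/s")
print(f"peak rate range: {peak_rates[0]:,.0f} to {peak_rates[1]:,.0f} msg/s")
print(f"text storage:    {daily_storage_tb:.1f} TB/day, {monthly_storage_tb:.0f} TB/month")
```

Note that the storage figures are raw text only; replication and media attachments would multiply them.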

Figure 1: Complete Design For Whatsapp

3. Approach “Think-Out-Loud” (High-Level Design)

3.1 Architecture Overview

Use a microservices or service-oriented approach, dividing responsibilities:

Gateway / Connection Manager

Handles persistent connections (WebSockets, MQTT, or a custom TCP protocol).

Authenticates and routes incoming/outgoing messages.

Chat Service

Core logic for one-to-one and group messaging.

Manages message states (sent, delivered, read).

Coordinates with presence and group membership data.

Group Management Service

Creates groups, adds/removes participants, group settings.

Fan-out logic can be here or in Chat Service.

Presence Service

Tracks which users are online/offline, last seen timestamps.

Distributes presence info (if needed) to user contacts or chats.

Message Store

Durable storage of all messages for X days/months.

Potentially leverages NoSQL (Cassandra) or a message queue pipeline (Kafka) + cold storage (S3/HDFS) for older data.

User Service

Profile data, phone number or ID-based authentication, contact lists.

Logging and Monitoring Service are covered in many previous designs.

Communication Patterns:

For real-time messaging: WebSocket or a specialized messaging protocol (like MQTT).

For internal services: mixture of gRPC/HTTP or async message buses (Kafka/RabbitMQ).

3.2 Data Consistency vs. Real-Time Requirements

Message Delivery: Typically “at least once” with deduplication if needed. Strong consistency for message state (e.g., delivered vs. read).

Presence: Accept eventual consistency. If presence is a few seconds out of sync, it’s not catastrophic.

Group Chat: Must ensure ordered delivery if possible, or at least an ordering key so clients can order messages.

3.3 Security & Privacy Considerations

End-to-End Encryption: Typically a client-level encryption scheme (Signal Protocol for WhatsApp).

From the server design standpoint, we store only encrypted payloads.

At-Rest Encryption: If storing messages, ensure encryption keys are well managed.

Access Control: Only conversation participants can retrieve messages belonging to them.

4. Databases & Rationale

4.1 Message Store

Primary Use Case:

Storing billions of short messages daily, with a TTL (time-to-live) if only storing for 90 days, for example.

Chosen Database:

NoSQL (Cassandra) or a similar column-family store.

Excellent write performance, linear scalability, can handle large volumes of data.

Flexible schema for message data: partitioned by (chat_id, time_bucket) or (user_id, chat_id, message_id).

Why:

Cassandra is horizontally scalable, offers tunable consistency, and is widely deployed for write-heavy workloads at scale (e.g., at Netflix and Instagram).

4.2 Group Membership & User Directory

Primary Use Case:

Quick lookups to see which users are in a group.

Who is an admin, etc.

Chosen Database:

Relational DB (e.g., Postgres) or a lightweight NoSQL.

If group membership changes frequently, a dynamic store like a NoSQL (MongoDB) might be simpler.

If we need strong consistency for membership changes, a relational store with transactions can be beneficial.

4.3 Presence Service

Primary Use Case:

Tracking online/offline status, last seen times.

Chosen Database:

Often in-memory (Redis or ephemeral store).

Presence data changes frequently and typically does not need to be stored long term.

Possibly store last seen time in a persistent DB for display, but ephemeral presence states can live in Redis.

4.4 Delivery Notification Data

Primary Use Case:

Keeping track of delivered/read states.

Chosen Database:

Could share the same store as the message data or a separate short-lived store (Redis) for quick lookups.

5. APIs

5.1 Gateway / Connection Manager

WebSocket or MQTT connect

Validates user token, establishes a session for real-time messages.

Disconnect

On app close or inactivity, triggers presence offline update.

5.2 Chat Service

POST /chat/sendMessage

Payload:

{ fromUserId, toUserId or groupId, messageContent }

Returns:

messageId, status=“SENT”

POST /chat/ackDelivery

Payload:

{ userId, messageId }

Marks the message as delivered to userId.

POST /chat/ackRead

Payload:

{ userId, messageId }

Marks the message as read by userId.

GET /chat/history?chatId=xxx

Returns: a list of messages for that chat (with paging).

5.3 Group Management Service

POST /groups/create

Payload:

{ groupName, adminUserId, participantIds }

PATCH /groups/{groupId}/addUser

Payload:

{ userId }

PATCH /groups/{groupId}/removeUser

Payload:

{ userId }

5.4 Presence Service

POST /presence/update

Payload:

{ userId, status=ONLINE|OFFLINE, timestamp }

GET /presence/{userId}

Returns:

{ status, lastSeen }

6. Deep Dive into Core Services

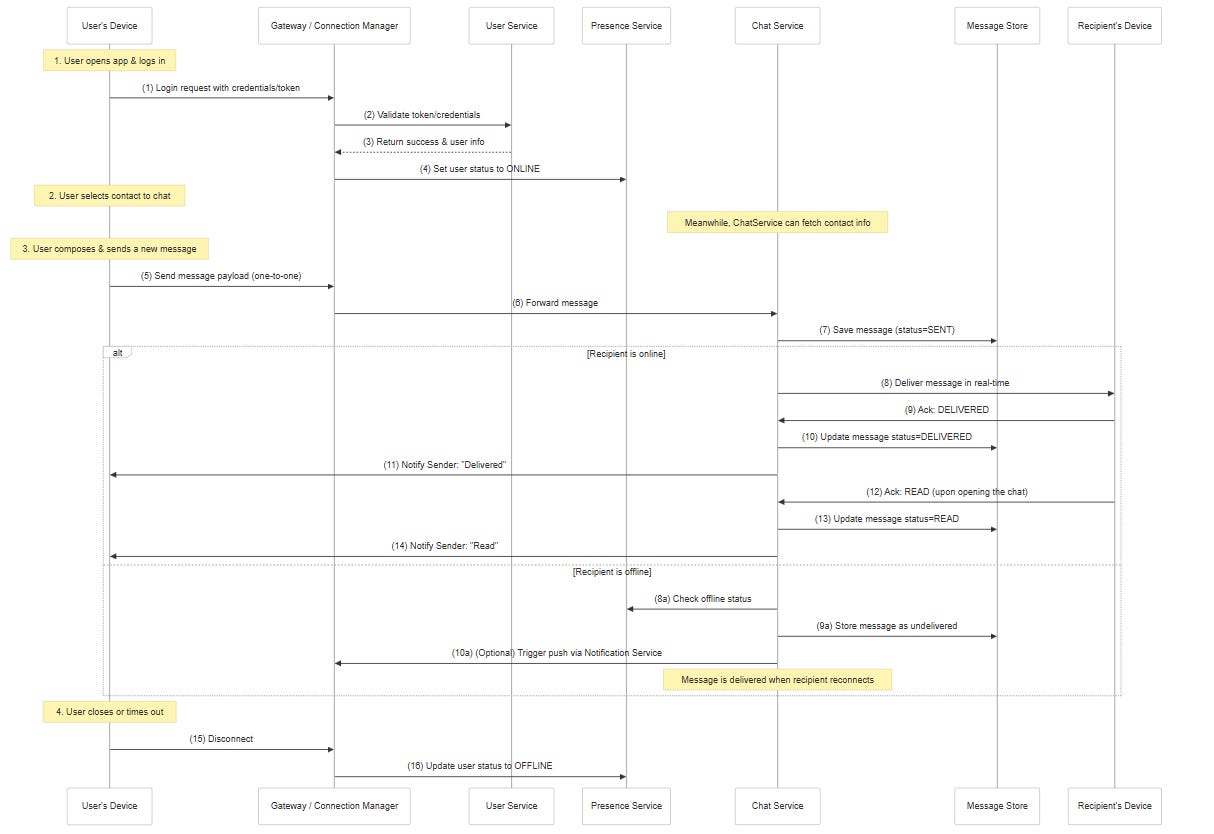

Figure 2: Complete chat System sequence diagram.

A. Gateway / Connection Manager

The Gateway (or Connection Manager) is the front-line service responsible for establishing and maintaining persistent connections with client devices. In a WhatsApp-like application, we want real-time, bi-directional communication, typically achieved via WebSockets or a TCP-based messaging protocol such as MQTT (or a custom one).

The Gateway handles the following tasks:

Authentication & Authorization

When a client (mobile app or web client) attempts to connect, the Gateway validates the user’s credentials (e.g., a token or session ID).

Session Management

Keeps track of each user’s active connections, typically storing them in memory or a fast in-memory data store (like Redis).

Routing Messages

For inbound messages (from user devices to the server), the Gateway routes them to the internal Chat Service or Group Management Service.

For outbound messages (from server to devices), the Gateway uses the existing WebSocket (or similar) sessions to push data in real time.

Presence Updates

Notifies the Presence Service that a user is online/offline or idle, enabling “last seen” or “online” statuses.

The Gateway is connected to:

User Service (to verify tokens)

Chat & Group Management Services (to deliver inbound chat messages)

Presence Service (to update presence changes)

Notification Service (sometimes, if fallback push is needed)

Logging & Monitoring (for storing connection logs, metrics)

Architecture and Data Flow

Connection Establishment

A user opens the messaging app. The app establishes a WebSocket connection to a load-balanced endpoint that points to a cluster of Gateway nodes.

On connect, the app sends an authentication token.

The Gateway node validates this token by calling the User Service or an authorization microservice. If valid, a session is created in memory (e.g., sessionId -> userId).

Maintaining Persistent Connections

After authentication, the user’s device pings periodically or sends heartbeats so the Gateway knows the user is still connected.

If heartbeats stop (due to app closure or network issues), the Gateway cleans up the session and signals the Presence Service that the user went offline.

Message Ingress

When the user sends a message, the app packages it into a JSON object (or binary protocol) and sends it over the WebSocket.

The Gateway receives the packet, checks if the user is authorized to perform this action, and then forwards it to the Chat Service.

The Gateway does minimal logic on the content—its primary job is message forwarding and session management.

Message Egress

The Chat Service or Group Management Service determines which recipients need to receive a message.

It returns either a direct response or uses a push pipeline to inform the Gateway which user IDs (and corresponding session IDs) need the message.

The Gateway uses its in-memory session map to find active connections for those recipients and sends the message down each open socket.
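The egress step can be illustrated with a small in-memory session map. This is a minimal sketch for a single Gateway node, not the author's implementation: `SessionRegistry` and its method names are hypothetical, and a plain callable stands in for a real WebSocket send.

```python
from collections import defaultdict


class SessionRegistry:
    """In-memory map of user IDs to open connections on one Gateway node (hypothetical)."""

    def __init__(self):
        # user_id -> {session_id: callable that writes to that connection}
        self._sessions = defaultdict(dict)

    def register(self, user_id, session_id, send):
        self._sessions[user_id][session_id] = send

    def unregister(self, user_id, session_id):
        connections = self._sessions.get(user_id)
        if connections is None:
            return
        connections.pop(session_id, None)
        if not connections:
            del self._sessions[user_id]

    def push(self, user_id, payload):
        """Send payload to every open session of user_id; return how many received it."""
        connections = self._sessions.get(user_id, {})
        for send in list(connections.values()):
            send(payload)
        return len(connections)


# Usage: lists stand in for sockets so the example runs without a network.
registry = SessionRegistry()
phone_inbox, web_inbox = [], []
registry.register("u2", "s-phone", phone_inbox.append)
registry.register("u2", "s-web", web_inbox.append)

delivered = registry.push("u2", {"messageId": "m1", "content": "hi"})
offline = registry.push("u3", {"messageId": "m2", "content": "hello"})
print(delivered, offline)
```

A return value of zero is the signal that the recipient has no live session on this node, which is where a shared session directory or a fallback push notification would come in.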

Corner Cases and Potential Solutions

Dropped Connections / Poor Network

Corner Case: A user’s connection might repeatedly drop because of poor mobile data or Wi-Fi.

Solution: Use robust reconnect logic in the client. The Gateway should handle partial sessions gracefully—mark the user offline after a short timeout, and once the user reconnects, the Gateway re-establishes the session.

High Throughput / Load Spikes

Corner Case: Suppose tens of millions of users connect simultaneously (e.g., a global event).

Solution: Horizontal Scaling of Gateway nodes behind a load balancer. Store ephemeral session data in a distributed cache like Redis or a shared consistent store so that any node can route messages for a particular user.

Routing Messages to the Wrong User

Corner Case: If the Gateway’s session mapping is corrupted, messages may get delivered to the wrong session.

Solution: Strict session validation, request-level authorization, and user-specific encryption keys so that even if a route is mistaken, the wrong user can’t decrypt the message.

Token Expiry / Re-authentication

Corner Case: Users might have invalid tokens or tokens that expire mid-connection.

Solution: The Gateway periodically re-verifies tokens or listens for revocation events from the User Service. If the token is invalid, the session is closed, forcing a re-login.

Presence Sync Delays

Corner Case: A user toggles between online/offline rapidly, resulting in presence update thrashing.

Solution: Debounce presence updates. The Gateway might hold changes for a few seconds before broadcasting them, ensuring stable presence signals.

Through these mechanisms, the Gateway remains a stateless (or minimally stateful) front door that is easy to scale, resilient to failures, and able to serve as the real-time bridge between clients and backend chat logic.

B. Chat Service & Group Management Service (Combined)

Overview and Purpose

In a WhatsApp-like system, the Chat Service and Group Management Service form the heart of messaging logic:

Chat Service:

Handles the send, receive, and acknowledgment flow for one-to-one and group messages.

Persists messages to the Message Store for durability.

Manages “delivery” and “read” states, updating the sender accordingly.

Group Management Service:

Creates and manages groups (add/remove participants, group naming, admin roles).

Coordinates with the Chat Service to perform fan-out of messages to all group participants.

The Chat Service is the “engine” that processes messages, while the Group Management Service ensures we know who to deliver group messages to and who has permissions to do so.

Chat & Group Management connect to:

Gateway / Connection Manager (inbound user messages, outbound notifications)

Presence Service (to check who is online and possibly show “typing” or presence info)

Message Store (for durable saving of messages)

Notification Service (to issue push if recipients are offline)

User Service (to confirm user identities, retrieve phone number or profile info)

Logging & Monitoring (for auditing and metrics)

Architecture and Data Flow

Sending a Message (One-to-One)

User device → Gateway → Chat Service.

The Chat Service validates the request (is the sender allowed to message the recipient?).

The Chat Service writes the message to the Message Store with a status of “SENT.”

The Chat Service attempts to deliver immediately. If the recipient is online, it routes the message ID to the Gateway, which pushes to the recipient’s active session.

Figure 3: One on one chat sequence diagram.

Group Chat Fan-Out

User device → Gateway → Chat Service.

The Chat Service calls the Group Management Service to fetch all participant IDs. (Alternatively, the Chat Service might have a cached copy of group membership for fast access.)

For each participant, the Chat Service does the same “write to store, then deliver” workflow. This step is called fan-out.

If any participant is offline, the Chat Service notifies the Notification Service to send a push alert.

Delivery & Read Acknowledgments

When a recipient’s device receives a message, it sends a “delivery ack” back.

The Chat Service updates the message state in the Message Store or in an in-memory “message state” cache.

When the recipient opens the chat, a “read ack” is sent, changing the state to “READ.” The Chat Service notifies the sender that the message has been read.

Group Creation & Membership Changes

The Group Management Service receives requests to create groups, add users, remove users, or set an admin.

Updates are stored in a membership table or document store.

For large groups, changes must be handled carefully to avoid partial updates (e.g., transaction or event-driven approach to ensure data consistency).

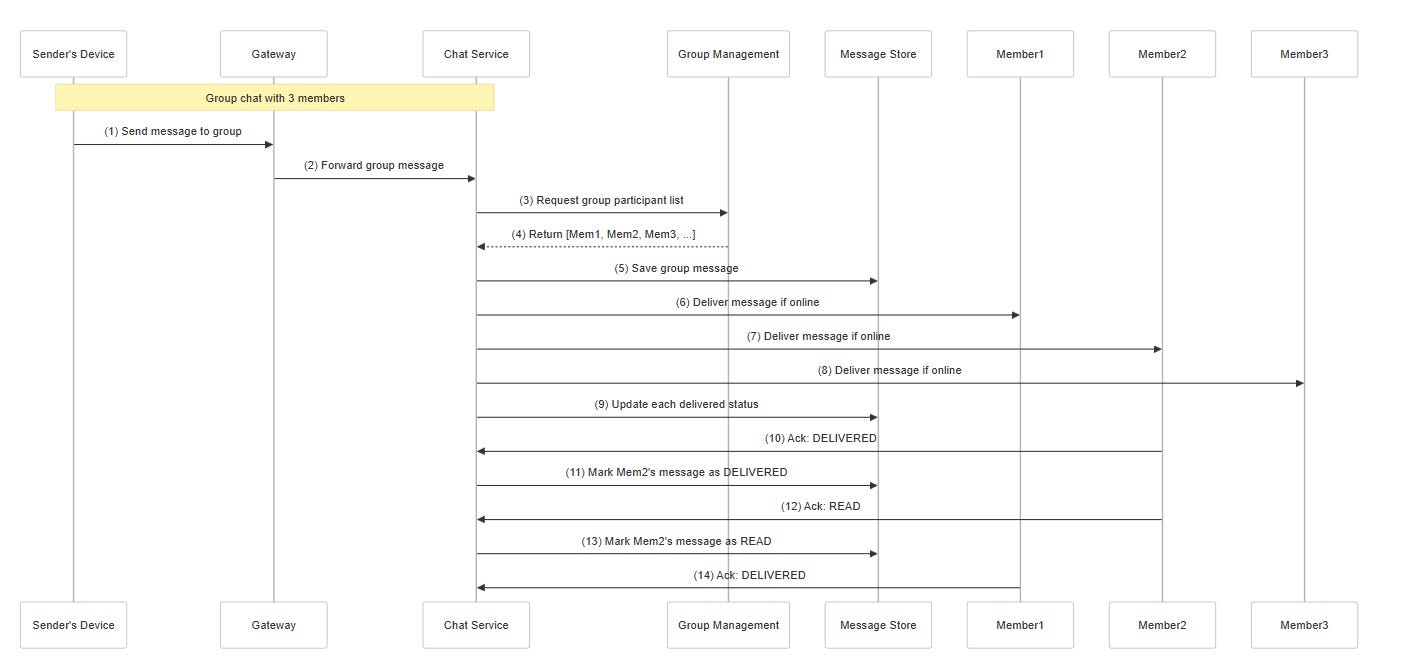

Figure 4: Group chat sequence diagram.
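The group fan-out flow can be sketched as a single loop over the membership list. This is an illustrative sketch that assumes the message has already been persisted; `fan_out` and the three callables passed into it are hypothetical stand-ins for the Presence Service, the Gateway, and the Notification Service.

```python
def fan_out(message, member_ids, is_online, push_to_gateway, notify_offline):
    """Deliver one group message to every member except the sender.

    Returns (pushed, notified): user IDs reached over a live connection and
    user IDs handed to the push-notification path.
    """
    pushed, notified = [], []
    for user_id in member_ids:
        if user_id == message["fromUserId"]:
            continue  # the sender already has the message
        if is_online(user_id):
            push_to_gateway(user_id, message)
            pushed.append(user_id)
        else:
            notify_offline(user_id, message)
            notified.append(user_id)
    return pushed, notified


# Usage with in-memory stand-ins for the dependent services.
online_users = {"u2"}
gateway_log, notification_log = [], []
message = {"messageId": "m1", "fromUserId": "u1", "groupId": "g1", "content": "hi all"}

pushed, notified = fan_out(
    message,
    member_ids=["u1", "u2", "u3"],
    is_online=lambda user_id: user_id in online_users,
    push_to_gateway=lambda user_id, msg: gateway_log.append((user_id, msg["messageId"])),
    notify_offline=lambda user_id, msg: notification_log.append((user_id, msg["messageId"])),
)
print(pushed, notified)
```

For very large groups this per-member loop is exactly the cost that batching or a fan-out-at-read approach tries to avoid.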

Internal Components

Message Dispatcher: A sub-module inside Chat Service that orchestrates the path from message ingestion to final delivery.

Ack Handler: Updates states (SENT → DELIVERED → READ).

Group Admin Logic: Inside Group Management: checks if the user performing an add/remove action is an admin.

Metadata Cache: Possibly an in-memory store to quickly retrieve group membership and user chat settings.
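The Ack Handler's state progression can be sketched as a tiny state machine. One assumption goes beyond the text here: states only ever move forward, so a duplicate or late "delivered" ack cannot downgrade a message that is already read. The names `MessageState` and `apply_ack` are illustrative.

```python
from enum import IntEnum


class MessageState(IntEnum):
    SENT = 1
    DELIVERED = 2
    READ = 3


def apply_ack(current, ack):
    """Return the new state, never moving backwards (assumed policy)."""
    return max(current, ack)


# Usage: acks may arrive out of order or more than once.
state = MessageState.SENT
state = apply_ack(state, MessageState.READ)        # read ack arrives first
state = apply_ack(state, MessageState.DELIVERED)   # late delivery ack is ignored
print(state.name)
```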

Corner Cases and Potential Solutions

Very Large Groups

Corner Case: Groups with thousands of participants can create a “fan-out storm” when a new message arrives.

Solution: Use “fan-out at read” (store message once, each participant retrieves upon query) or carefully batch pushes to the Gateway. Some systems also rely on a pub/sub layer (e.g., Kafka) to handle large-scale broadcast.

Duplicate Messages

Corner Case: A user’s device resends the same message ID after a network glitch.

Solution: Idempotent message IDs. The Chat Service checks whether messageId already exists in the store and ignores duplicates.

Message Ordering

Corner Case: The Chat Service processes message #2 before message #1 due to concurrency.

Solution: Use a monotonically increasing sequence number or a timestamp with a tiebreak. The client can reorder messages in the chat view if needed.

Group Admin Conflicts

Corner Case: Two admins remove or add participants simultaneously, causing race conditions.

Solution: Use a database transaction or versioning approach. The Group Management Service can also implement optimistic concurrency (check the version of group membership before applying changes).

Offline / Online Sync

Corner Case: A user was offline for a week, then rejoins. They need all missed group messages.

Solution: The Chat Service retrieves messages from the Message Store for the user’s offline window. The user’s client can sync those messages upon reconnection.
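Two of the corner cases above, duplicate messages and message ordering, can be illustrated together. The sketch below is a toy in-memory stand-in for one chat's log, assuming a per-chat sequence number assigned on first write; `ChatLog` and its fields are hypothetical and say nothing about how a real store would implement this.

```python
class ChatLog:
    """Toy in-memory log for a single chat (hypothetical, not a real store)."""

    def __init__(self):
        self._by_id = {}     # messageId -> message dict
        self._next_seq = 1   # per-chat, monotonically increasing

    def append(self, message_id, sender_id, content):
        """Store a message once. A resend with the same ID returns the original."""
        existing = self._by_id.get(message_id)
        if existing is not None:
            return existing, False
        message = {
            "messageId": message_id,
            "senderId": sender_id,
            "content": content,
            "seq": self._next_seq,
        }
        self._next_seq += 1
        self._by_id[message_id] = message
        return message, True

    def ordered(self):
        """Messages in conversation order: sequence number, then ID as a tiebreak."""
        return sorted(self._by_id.values(), key=lambda m: (m["seq"], m["messageId"]))


# Usage: a client retry does not create a second copy, and a client that
# receives messages out of order can restore the order from the sort key.
log = ChatLog()
first, stored_first = log.append("m-1", "u1", "hello")
retry, stored_retry = log.append("m-1", "u1", "hello")
second, _ = log.append("m-2", "u2", "hi back")

arrival_order = [second, first]
display_order = sorted(arrival_order, key=lambda m: (m["seq"], m["messageId"]))
print([m["messageId"] for m in display_order])
```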

Through these mechanisms, the combined Chat & Group Management functionality ensures a robust messaging experience, from small personal chats to large community groups.
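As one illustration of the optimistic-concurrency option mentioned for group admin conflicts, the sketch below keeps a version number next to each membership set and rejects writes made against a stale version. `GroupStore` and `VersionConflict` are hypothetical names; a real system would do the compare-and-set inside the database.

```python
class VersionConflict(Exception):
    """Raised when a write is based on an outdated membership version."""


class GroupStore:
    """Toy membership store with compare-and-set updates (hypothetical)."""

    def __init__(self):
        self._groups = {}  # group_id -> (version, frozenset of member IDs)

    def create(self, group_id, members):
        self._groups[group_id] = (1, frozenset(members))

    def read(self, group_id):
        return self._groups[group_id]

    def update(self, group_id, expected_version, new_members):
        version, _ = self._groups[group_id]
        if version != expected_version:
            raise VersionConflict(f"expected v{expected_version}, found v{version}")
        self._groups[group_id] = (version + 1, frozenset(new_members))
        return version + 1


# Usage: two admins read the same version, then both try to write.
store = GroupStore()
store.create("g1", {"u1", "u2"})
version_a, members_a = store.read("g1")
version_b, members_b = store.read("g1")

store.update("g1", version_a, members_a | {"u3"})      # admin A adds u3
try:
    store.update("g1", version_b, members_b - {"u2"})  # admin B is now stale
    conflict = False
except VersionConflict:
    conflict = True
    version_b, members_b = store.read("g1")            # re-read, then retry
    store.update("g1", version_b, members_b - {"u2"})

final_version, final_members = store.read("g1")
print(conflict, final_version, sorted(final_members))
```

The retry applies admin B's removal on top of admin A's addition, so neither change is silently lost.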

C. Presence Service

Overview and Purpose

The Presence Service tracks whether each user is online, offline, or possibly idle. It can also store the user’s “last seen” timestamp and indicate “typing” status in a one-to-one or group conversation.

In one line, Presence Service connects to:

Gateway / Connection Manager (to receive online/offline events in real time)

Chat Service (to optionally display presence to other users, or handle “typing…” signals)

Logging & Monitoring (for presence updates)

Architecture and Data Flow

Online / Offline Detection

The Gateway triggers an event (presence.update) whenever a user connects, disconnects, or if a connection times out.

The Presence Service receives this event, updates the user’s status in an in-memory store like Redis, or an in-process data structure if it’s small scale.

Last Seen Updates

When a user goes offline, the Gateway includes a timestamp in the presence.update event.

The Presence Service writes lastSeen to a persistent store. This data can be polled or requested by other services to show “last seen at 2:15 PM.”

Typing Indicator

The Chat Service or the user’s device sends “typing on/typing off” signals to the Presence Service (or sometimes direct to the Chat Service, which then routes to recipients).

The Presence Service can store these ephemeral statuses short-term in memory. The recipients can poll or subscribe for these updates in real time.

Broadcasting Presence

If the design calls for it, the Presence Service can broadcast presence changes to the user’s friends or group members. For example, if user A comes online, user B sees “A is online.”

Alternatively, each client can query the Presence Service or Chat Service whenever it needs an update.

Corner Cases and Potential Solutions

Unstable Networks

Corner Case: A user’s mobile device might flap between online/offline states multiple times in a short period (entering a tunnel, etc.).

Solution: Implement a small debounce or threshold. The service only updates presence after a stable state is confirmed (e.g., user is offline for at least 5 seconds).

Ghost Sessions

Corner Case: The server believes a user is still online because it never received a disconnect event (for instance, the phone lost power abruptly).

Solution: Timeouts/heartbeats. If no ping from the user’s client for X seconds, the Presence Service auto-sets them offline.

Privacy Settings

Corner Case: Some users don’t want to display their presence or last seen times.

Solution: The Presence Service can store per-user privacy preferences. If “lastSeenHidden” is enabled, the service doesn’t share last seen to other users.

Load Surges

Corner Case: Millions of presence changes could arrive in real time if a large user base experiences an outage or a reconnect event.

Solution: Scale horizontally with an in-memory store like Redis Cluster. The Presence Service can also batch or queue updates in a distributed messaging system (e.g., Kafka) to handle spikes.

Typing Flood

Corner Case: If a group has 500 participants, constant “User X is typing” updates can become noisy.

Solution: Rate limit “typing” events. The Presence Service might suppress redundant “typing on” signals if they occur too frequently.
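The debounce idea from the unstable-networks case can be sketched as follows. This is a simplified illustration that assumes OFFLINE is only published after a grace period (5 seconds, as in the example above) while ONLINE is published immediately; `PresenceDebouncer` is a hypothetical name, and timestamps are passed in explicitly so the example is deterministic.

```python
class PresenceDebouncer:
    """Delays OFFLINE announcements so brief disconnects are not broadcast (sketch)."""

    def __init__(self, offline_grace_seconds=5.0):
        self.grace = offline_grace_seconds
        self._published = {}        # user_id -> last published status
        self._pending_offline = {}  # user_id -> time the disconnect was observed

    def on_connect(self, user_id):
        """Cancel any pending OFFLINE; return "ONLINE" only if it is a real change."""
        self._pending_offline.pop(user_id, None)
        if self._published.get(user_id) != "ONLINE":
            self._published[user_id] = "ONLINE"
            return "ONLINE"
        return None

    def on_disconnect(self, user_id, now):
        self._pending_offline.setdefault(user_id, now)

    def flush(self, now):
        """Publish OFFLINE for users disconnected for at least the grace period."""
        expired = [u for u, t in self._pending_offline.items() if now - t >= self.grace]
        for user_id in expired:
            del self._pending_offline[user_id]
            self._published[user_id] = "OFFLINE"
        return expired

    def status(self, user_id):
        return self._published.get(user_id, "OFFLINE")


# Usage: a 2-second drop is absorbed; a longer one is published after 5 seconds.
debouncer = PresenceDebouncer(offline_grace_seconds=5.0)
first_connect = debouncer.on_connect("u1")
debouncer.on_disconnect("u1", now=10.0)
reconnect = debouncer.on_connect("u1")      # back before the grace period ends
debouncer.on_disconnect("u1", now=30.0)
early = debouncer.flush(now=33.0)
late = debouncer.flush(now=35.0)
print(first_connect, reconnect, early, late)
```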

Figure 5: Presence Service Sequence Diagram

By managing ephemeral statuses in memory and carefully storing only essential data (last seen) in a database, the Presence Service remains highly performant and provides an enhanced real-time feel.
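The rate limiting suggested for the typing-flood case could look like the sketch below. It assumes a simple policy of forwarding at most one "typing" event per user per chat within a fixed interval; the 3-second default and the name `TypingThrottle` are illustrative choices, not values from the design.

```python
class TypingThrottle:
    """Forwards at most one typing event per (chat, user) per interval (sketch)."""

    def __init__(self, min_interval_seconds=3.0):
        self.min_interval = min_interval_seconds
        self._last_forwarded = {}  # (chat_id, user_id) -> time of last forwarded event

    def should_forward(self, chat_id, user_id, now):
        key = (chat_id, user_id)
        last = self._last_forwarded.get(key)
        if last is not None and now - last < self.min_interval:
            return False  # too soon after the previous event: suppress
        self._last_forwarded[key] = now
        return True


# Usage: five keystroke events in under four seconds produce two broadcasts.
throttle = TypingThrottle(min_interval_seconds=3.0)
decisions = [throttle.should_forward("g1", "u1", t) for t in (0.0, 1.0, 2.9, 3.0, 3.5)]
print(decisions)
```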

D. Message Store

Overview and Purpose

The Message Store is a critical backend component ensuring that messages are durable and retrievable. In a WhatsApp-like system, messages may be deleted from the server after delivery (as WhatsApp originally did), or stored for extended periods to support multi-device sync. Modern chat apps increasingly store messages to let users retrieve their chat history across devices.

Core responsibilities of the Message Store:

Persist Messages at high velocity and volume (potentially billions of daily writes).

Retrieve Messages for conversation history or offline sync.

Handle TTL (Time to Live) or archiving if older messages are purged.

Support partial updates (delivery/read states) or store them in an auxiliary table.

In one line, the Message Store connects to:

Chat Service (reads/writes message data)

Group Management Service (might query message data if needed)

Analytics/BI (for usage metrics, though might be separate)

Logging & Monitoring (to track DB performance)

Typical Database Choice

NoSQL (e.g., Cassandra) is often used due to:

High Write Throughput: Cassandra can scale horizontally, handling large numbers of writes with low latency.

Tunable Consistency: The system can choose a consistency level (e.g., QUORUM or LOCAL_QUORUM) that balances performance and data correctness.

Partitioning: Data can be partitioned by user ID or chat ID, distributing load across many nodes.

Alternatively, MongoDB or HBase might be used. The key is being able to handle huge volumes of data while providing quick lookups.
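The tunable-consistency point comes down to simple arithmetic: a quorum is a majority of replicas, and a read is guaranteed to overlap the latest acknowledged write when the number of read replicas plus write replicas exceeds the replication factor. The helper names below are illustrative.

```python
def quorum(replication_factor):
    """Majority of replicas, as used by QUORUM-style consistency levels."""
    return replication_factor // 2 + 1


def read_overlaps_write(replication_factor, write_acks, read_acks):
    """True when every read set must intersect every write set (R + W > N)."""
    return write_acks + read_acks > replication_factor


rf = 3
q = quorum(rf)
print(q, read_overlaps_write(rf, q, q), read_overlaps_write(rf, 1, 1))
```

With a replication factor of 3, quorum reads and writes each touch 2 replicas and can tolerate one replica being down, while writing and reading at a single replica is faster but may return stale data.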

Data Model

A possible Cassandra schema:

    CREATE TABLE messages (
        chat_id TEXT,
        message_id UUID,
        sender_id TEXT,
        recipient_id TEXT, // or a repeated field for group recipients
        content TEXT, // or BLOB if encrypted
        timestamp TIMESTAMP,
        status TEXT, // "SENT", "DELIVERED", "READ"
        PRIMARY KEY (chat_id, timestamp, message_id)
    ) WITH CLUSTERING ORDER BY (timestamp DESC);

chat_id: For one-to-one chat, it could be a concatenation of user IDs. For group chat, a unique group ID.

message_id: Uniquely identifies each message.

status: Optionally stored here or in a separate table to reduce write amplification.
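If chat_id for a one-to-one chat is built by concatenating user IDs, both participants need to derive the same value regardless of who sends first. One way to do that, shown here as an assumption rather than the article's scheme, is to sort the two IDs before joining them; the ":" separator is also an assumption and must not appear inside a user ID.

```python
def direct_chat_id(user_a, user_b):
    """Order-independent chat ID for a one-to-one conversation (illustrative)."""
    first, second = sorted((user_a, user_b))
    return f"{first}:{second}"


print(direct_chat_id("u2", "u1"), direct_chat_id("u1", "u2"))
```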

Read Flow

When a user opens a chat, the Chat Service queries the Message Store:

SELECT * FROM messages WHERE chat_id = :chatId ORDER BY timestamp DESC LIMIT 50;

This fetches the latest messages in descending time order. The client can page backward for older messages if needed.

Write Flow

The Chat Service calls:

INSERT INTO messages (chat_id, message_id, sender_id, content, timestamp, status) VALUES (...);

If there’s a separate table or a separate column for read/delivery states, it might be updated with another statement.

Corner Cases and Potential Solutions

Hot Partitions

Corner Case: A single group or user is extremely active, saturating the partition that holds (chat_id = "very_popular_group").

Solution: Data modeling strategies such as bucketing by time (e.g., partition by (chat_id, date)) to distribute writes. Also, ensure enough replication and node capacity.

High Read Latencies

Corner Case: If many users try to fetch large chat histories simultaneously, read performance might degrade.

Solution: Implement caching of recent messages in Redis. Or replicate data to a specialized search system (Elasticsearch) for advanced queries.

Message Deletion / Expiration

Corner Case: The app implements ephemeral messages that vanish after X days or even minutes.

Solution: Use Cassandra’s TTL feature or run a regular cleanup job. For compliance reasons, ensure no data lingers beyond the user’s chosen policy.

Multi-DC Replication

Corner Case: The messaging platform is global, with data centers in the US, Europe, and Asia. A user in Asia sends a message, but the main cluster is in the US.

Solution: Local DC writes with eventual replication to other DCs. The Chat Service in Asia writes to the local Cassandra cluster. The data eventually replicates across continents asynchronously, keeping local writes fast.

Encryption Requirements

Corner Case: End-to-end encryption means the server can’t read the content. Only the encrypted blob is stored.

Solution: Store the ciphertext as a BLOB in the content field. Metadata like sender_id, recipient_id, and timestamp remain unencrypted for indexing or retrieval. The keys are on user devices.

Resending vs. Retries

Corner Case: The Chat Service tries to insert a message, the write appears to fail, and the retry creates a duplicate.

Solution: Use idempotent writes. The Chat Service checks whether message_id already exists before inserting.
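The time-bucketing strategy from the hot-partition case can be sketched as a function that derives the partition key from the chat ID and the message time. This assumes daily buckets in UTC and a `YYYYMMDD` string format, both illustrative choices; `partition_key` is a hypothetical helper.

```python
from datetime import datetime, timezone


def partition_key(chat_id, sent_at):
    """Return (chat_id, day_bucket), where day_bucket is the UTC date as YYYYMMDD."""
    bucket = sent_at.astimezone(timezone.utc).strftime("%Y%m%d")
    return (chat_id, bucket)


# Usage: two messages a minute apart land in different partitions at midnight UTC.
before_midnight = partition_key("g1", datetime(2024, 1, 31, 23, 59, tzinfo=timezone.utc))
after_midnight = partition_key("g1", datetime(2024, 2, 1, 0, 0, tzinfo=timezone.utc))
print(before_midnight, after_midnight)
```

The trade-off is on the read side: fetching history that spans several days means querying one partition per bucket, newest first.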

Scaling and Maintenance Considerations

Capacity Planning: For billions of messages, the cluster must have enough nodes to handle IOPS and store data.

Repair and Compaction: Cassandra requires regular repair tasks to keep replicas in sync, and compaction to optimize data on disk.

Monitoring: Track read/write latencies, tombstones, replication lags.

7. Addressing Non-Functional Requirements (NFRs)

A. Scalability & High Availability

Connection Manager: Deploy many Gateway nodes behind a load balancer (e.g., HAProxy/Nginx), keeping shared session state in an external store such as Redis so that nodes stay interchangeable.

Global Regions: Multiple data centers, each with a Cassandra cluster. Use data center replication to keep user data close to home region.

Partitioning & Sharding: Chat data is partitioned to avoid hot spots.

Auto-Scaling: For high message volumes, spin up more Chat Service instances, Kafka brokers (if used), etc.

B. Performance & Low Latency

WebSocket or MQTT: Keeps latency low for real-time pushes.

In-Memory State: Presence and read receipts are served from an in-memory store rather than the durable message database.

Local Caches: For frequently accessed data (recent chats).

Async Workflows: Non-critical tasks like logging or analytics run out-of-band.

C. Security & Privacy

Encryption in Transit: TLS for all connections.

End-to-End Encryption: Clients handle message encryption; server stores only encrypted blobs.

Access Controls: Only recipients can retrieve message content.

D. Reliability & Fault Tolerance

Multi-Region Redundancy: If one region is down, route traffic to another.

Replication: Cassandra replication factor >1 ensures data durability.

Message Queues: If using Kafka for ephemeral queueing, have cluster replication.

Graceful Degradation: If presence or read receipts fail, the system still sends messages.

E. Observability & Monitoring

Centralized Logging: Possibly store only essential info to manage scale.

Dashboards & Alerts: Real-time visibility into message throughput, latency, error rates.

Tracing: Distributed tracing (OpenTelemetry, Jaeger) for debugging slow message deliveries.

8. Bringing It All Together

By decomposing the platform into specialized services—Gateway, Chat, Group Management, Presence, Message Store, Notification, Logging/Monitoring—we achieve:

Massive Scale: Handle billions of daily messages with low latency.

Fault Tolerance: Multi-region, replicated data stores, load balancing.

Real-Time User Experience: WebSocket connections ensure instantaneous message delivery.

Durable Storage: Cassandra-like DB for message persistence and efficient retrieval of conversation history.

Feature Rich: Group chats with membership management, read receipts, push notifications, presence indicators.

Global Accessibility: Data center partitioning, geo-replication for local speed, offline push for remote/inactive devices.

This design aligns with proven large-scale messaging systems (like WhatsApp, WeChat, Telegram). While real-world implementations add encryption complexities and advanced spam controls, the core architecture here emphasizes reliability, horizontal scalability, and real-time messaging.

Hi, Thanks

With multiple WebSocket servers (for both group and private 1:1 chats):

u1 → GW1, u2 → GW2, and u1 sends to u2 (or the chat server pushes to u1).

For outbound delivery, are we maintaining a user-to-server mapping and then calling the right server synchronously, or do we have a separate queue (or Kafka topic) per server? If the latter, what happens when one of the servers fails?

Assume ~300k peak QPS.